After installing Debian buster on my GnuBee, I set it up for receiving backups from my other computers.

Software setup

I started by configuring it like a typical server, but left out a few packages that take a lot of either memory or CPU.

I changed the default hostname:

/etc/hostname: foobar

/etc/mailname: foobar.example.com

/etc/hosts: 127.0.0.1 foobar.example.com foobar localhost

and then installed the avahi-daemon package to be able to reach this box using foobar.local.

I noticed the presence of a world-writable directory and so I tightened the security of some of the default mount points by putting the following in /etc/rc.local:

chmod 755 /etc/network

exit 0

Hardware setup

My OS drive (/dev/sda) is a small SSD so that the GnuBee can run silently when the spinning disks aren't needed. To hold the backup data, on the other hand, I got three 4-TB drives which I set up in a RAID-5 array.

If the data were valuable, I'd use RAID-6 instead since it can survive two drives failing at the same time, but in this case since it's only holding backups, I'd have to lose the original machine at the same time as two of the 3 drives, a very unlikely scenario.
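For reference, the usable space of such an array is easy to work out: RAID-5 gives up one drive's worth of capacity to parity and RAID-6 gives up two. The following is a minimal sketch of that arithmetic. The raid_usable_tb function name is made up for this illustration, the minimum drive counts are the conventional ones, and filesystem overhead and the TB/TiB distinction are ignored.

```shell
#!/bin/sh
# Usable capacity, in TB, of an array of identical drives.
# usage: raid_usable_tb LEVEL DRIVE_COUNT DRIVE_SIZE_TB
raid_usable_tb() {
    level=$1
    count=$2
    size=$3
    case "$level" in
        5) parity=1; minimum=3 ;;   # one drive's worth of parity
        6) parity=2; minimum=4 ;;   # two drives' worth of parity
        *) echo "unsupported RAID level: $level" >&2; return 1 ;;
    esac
    if [ "$count" -lt "$minimum" ]; then
        echo "RAID-$level needs at least $minimum drives" >&2
        return 1
    fi
    echo $(( (count - parity) * size ))
}

raid_usable_tb 5 3 4   # the setup described above, prints 8
raid_usable_tb 6 4 4   # RAID-6 with a fourth drive, prints 8
```

In other words, three 4-TB drives in RAID-5 leave 8 TB for data, whereas RAID-6 would need a fourth drive to offer the same space.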

I created new GPT partition tables on /dev/sdb, /dev/sdc and /dev/sdd and used fdisk to create a single partition of type 29 (Linux RAID) on each of them.

Then I created the RAID array:

mdadm /dev/md127 --create -n 3 --level=raid5 /dev/sdb1 /dev/sdc1 /dev/sdd1

and waited more than 24 hours for that operation to finish. Next, I formatted the array:

mkfs.ext4 -m 0 /dev/md127

and added the following to /etc/fstab:

/dev/md127 /mnt/data/ ext4 noatime,nodiratime 0 2

Keeping a copy of the root partition

In order to survive a failing SSD drive, I could have bought a second SSD and gone for a RAID-1 setup. Instead, I went for a cheaper option, a poor man's RAID-1, where I will have to reinstall the machine but it will be very quick and I won't lose any of my configuration.

The way that it works is that I periodically sync the contents of the root partition onto the RAID-5 array using a cronjob in /etc/cron.d/hdd-sync:

0 10 * * * root /usr/local/sbin/ssd_root_backup

which runs the /usr/local/sbin/ssd_root_backup script:

#!/bin/sh

nice ionice -c3 rsync -aHx --delete --exclude=/dev/* --exclude=/proc/* --exclude=/sys/* --exclude=/tmp/* --exclude=/mnt/* --exclude=/lost+found/* --exclude=/media/* --exclude=/var/tmp/* /* /mnt/data/root/
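One thing to keep in mind with this approach: if the array ever fails to mount, /mnt/data/root/ is just a directory on the SSD and rsync could end up filling the root partition with a copy of itself. A possible guard, sketched here under the assumption that mountpoint (from util-linux) is available, is to check the mount before syncing. The backup_target_ready name is hypothetical and not part of the script above.

```shell
#!/bin/sh
# Succeeds only if the given directory is currently a mount point.
# Relies on mountpoint(1) from util-linux.
backup_target_ready() {
    mountpoint -q "$1"
}

# Hypothetical use at the top of /usr/local/sbin/ssd_root_backup:
#   backup_target_ready /mnt/data || exit 1
```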

Drive spin down

To reduce unnecessary noise and reduce power consumption, I also installed hdparm:

apt install hdparm

and configured all spinning drives to spin down after being idle for 2 minutes and for maximum power saving by putting the following in /etc/hdparm.conf:

/dev/sdb {

apm = 1

spindown_time = 24

}

/dev/sdc {

apm = 1

spindown_time = 24

}

/dev/sdd {

apm = 1

spindown_time = 24

}

and then reloaded the configuration:

/usr/lib/pm-utils/power.d/95hdparm-apm resume
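Note that the spindown_time value is not expressed in minutes. According to the hdparm man page, values from 1 to 240 are multiples of 5 seconds and values from 241 to 251 are multiples of 30 minutes. A small helper (spindown_seconds is a name made up for this sketch) makes the conversion explicit:

```shell
#!/bin/sh
# Convert an hdparm -S / spindown_time value into a timeout in seconds.
# Only the 0-251 range is handled; the remaining values have special
# meanings described in the hdparm man page.
spindown_seconds() {
    value=$1
    if [ "$value" -eq 0 ]; then
        echo 0                                  # 0 disables the timeout
    elif [ "$value" -le 240 ]; then
        echo $(( value * 5 ))                   # multiples of 5 seconds
    elif [ "$value" -le 251 ]; then
        echo $(( (value - 240) * 30 * 60 ))     # multiples of 30 minutes
    else
        echo "unsupported spindown value: $value" >&2
        return 1
    fi
}

spindown_seconds 24   # prints 120, i.e. the 2 minutes used above
```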

Monitoring drive health

Finally, I set up smartmontools by putting the following in /etc/smartd.conf:

/dev/sda -a -o on -S on -s (S/../.././02|L/../../6/03)

/dev/sdb -a -o on -S on -s (S/../.././02|L/../../6/03)

/dev/sdc -a -o on -S on -s (S/../.././02|L/../../6/03)

/dev/sdd -a -o on -S on -s (S/../.././02|L/../../6/03)

and restarting the daemon:

systemctl restart smartd.service

Some of the errors reported by this tool are good predictors of imminent failure.
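The -s argument in that configuration is a regular expression which smartd matches against strings of the form T/MM/DD/d/HH, where T is the test type, d the day of the week (1 for Monday through 7 for Sunday) and HH the hour. To illustrate how the schedule above reads, here is a rough sketch which runs the same expression through grep. smartd_test_due is a hypothetical helper, not something shipped with smartmontools.

```shell
#!/bin/sh
# The -s argument from /etc/smartd.conf above.
SCHEDULE='(S/../.././02|L/../../6/03)'

# usage: smartd_test_due TYPE MONTH DAY WEEKDAY HOUR
# TYPE is S (short) or L (long); WEEKDAY is 1 (Monday) to 7 (Sunday).
smartd_test_due() {
    printf '%s/%s/%s/%s/%s\n' "$1" "$2" "$3" "$4" "$5" |
        grep -Eq "^${SCHEDULE}\$"
}

smartd_test_due S 05 18 1 02 && echo "short test: every day at 02:00"
smartd_test_due L 05 23 6 03 && echo "long test: Saturdays at 03:00"
smartd_test_due L 05 18 1 03 || echo "no long test on a Monday"
```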

Backup setup

I started by using duplicity since I have been using that tool for many years, but a 190GB backup took around 15 hours on the GnuBee with gigabit ethernet.

After a friend suggested it, I took a look at restic and I have to say that I am impressed. The same backup finished in about half the time.

User and ssh setup

After hardening the ssh setup as I usually do, I created a user account for each machine needing to back up onto the GnuBee:

adduser machine1

adduser machine1 sshuser

adduser machine1 sftponly

chsh machine1 -s /bin/false

and then matching directories under /mnt/data/home/:

mkdir /mnt/data/home/machine1

chown machine1:machine1 /mnt/data/home/machine1

chmod 700 /mnt/data/home/machine1

Then I created a custom passwordless ssh key for each machine:

ssh-keygen -f /root/backup/foobar_backups -t ed25519

and placed the public half (foobar_backups.pub) in /home/machine1/.ssh/authorized_keys on the GnuBee.

Then I added the restrict prefix in front of that key so that it looked like:

restrict ssh-ed25519 AAAAC3N... root@machine1
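The restrict option turns off port, agent and X11 forwarding as well as PTY allocation for that key. Since this step has to be repeated for every machine, it could be scripted along these lines (add_restricted_key is a hypothetical helper and the file paths in the comment are only examples):

```shell
#!/bin/sh
# usage: add_restricted_key PUBLIC_KEY_FILE AUTHORIZED_KEYS_FILE
# Appends every key found in PUBLIC_KEY_FILE to AUTHORIZED_KEYS_FILE,
# with the "restrict" option in front of it. Empty lines are skipped.
add_restricted_key() {
    sed -e '/^$/d' -e 's/^/restrict /' "$1" >> "$2"
}

# Example, run on the GnuBee after copying the public key over:
#   add_restricted_key foobar_backups.pub /home/machine1/.ssh/authorized_keys
```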

On each machine, I added the following to /root/.ssh/config:

Host foobar.local

User machine1

Compression no

Ciphers aes128-ctr

IdentityFile /root/backup/foobar_backups

IdentitiesOnly yes

ServerAliveInterval 60

ServerAliveCountMax 240

The reason for setting the ssh cipher and disabling compression is to speed up the ssh connection as much as possible given that the GnuBee has a very small RAM bandwidth.

Another performance-related change I made on the GnuBee was switching to the internal sftp server by putting the following in /etc/ssh/sshd_config:

Subsystem sftp internal-sftp

Restic script

After reading through the excellent restic documentation, I wrote the following backup script, based on my old duplicity script, to reuse on all of my computers:

# Configure for each host

PASSWORD="XXXX" # use `pwgen -s 64` to generate a good random password

BACKUP_HOME="/root/backup"

REMOTE_URL="sftp:foobar.local:"

RETENTION_POLICY="--keep-daily 7 --keep-weekly 4 --keep-monthly 12 --keep-yearly 2"

# Internal variables

SSH_IDENTITY="IdentityFile=$BACKUP_HOME/foobar_backups"

EXCLUDE_FILE="$BACKUP_HOME/exclude"

PKG_FILE="$BACKUP_HOME/dpkg-selections"

PARTITION_FILE="$BACKUP_HOME/partitions"

# If the list of files has been requested, only do that

if [ "$1" = "--list-current-files" ]; then

RESTIC_PASSWORD=$PASSWORD restic --quiet -r $REMOTE_URL ls latest

exit 0

# Show list of available snapshots

elif [ "$1" = "--list-snapshots" ]; then

RESTIC_PASSWORD=$PASSWORD restic --quiet -r $REMOTE_URL snapshots

exit 0

# Restore the given file

elif [ "$1" = "--file-to-restore" ]; then

if [ "$2" = "" ]; then

echo "You must specify a file to restore"

exit 2

fi

RESTORE_DIR="$(mktemp -d ./restored_XXXXXXXX)"

RESTIC_PASSWORD=$PASSWORD restic --quiet -r $REMOTE_URL restore latest --target "$RESTORE_DIR" --include "$2" || exit 1

echo "$2 was restored to $RESTORE_DIR"

exit 0

# Delete old backups

elif [ "$1" = "--prune" ]; then

# Expire old backups

RESTIC_PASSWORD=$PASSWORD restic --quiet -r $REMOTE_URL forget $RETENTION_POLICY

# Delete files which are no longer necessary (slow)

RESTIC_PASSWORD=$PASSWORD restic --quiet -r $REMOTE_URL prune

exit 0

# Unlock the repository

elif [ "$1" = "--unlock" ]; then

RESTIC_PASSWORD=$PASSWORD restic -r $REMOTE_URL unlock

exit 0

# Catch invalid arguments

elif [ "$1" != "" ]; then

echo "Invalid argument: $1"

exit 1

fi

# Check the integrity of existing backups

CHECK_CACHE_DIR="$(mktemp -d /var/tmp/restic-check-XXXXXXXX)"

RESTIC_PASSWORD=$PASSWORD restic --quiet --cache-dir=$CHECK_CACHE_DIR -r $REMOTE_URL check || exit 1

rmdir "$CHECK_CACHE_DIR"

# Dump list of Debian packages

dpkg --get-selections > $PKG_FILE

# Dump partition tables from harddrives

/sbin/fdisk -l /dev/sda > $PARTITION_FILE

/sbin/fdisk -l /dev/sdb >> $PARTITION_FILE

# Do the actual backup

RESTIC_PASSWORD=$PASSWORD restic --quiet --cleanup-cache -r $REMOTE_URL backup / --exclude-file $EXCLUDE_FILE

I run it with the following cronjob in /etc/cron.d/backups:

30 8 * * * root ionice nice /root/backup/backup-machine1-to-foobar

30 2 * * Sun root ionice nice /root/backup/backup-machine1-to-foobar --prune

in a way that doesn't impact the rest of the system too much.

I also put the following in my /etc/rc.local to clean up any leftover temp directories for aborted backups:

rmdir --ignore-fail-on-non-empty /var/tmp/restic-check-*
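An alternative to cleaning up at boot time would be to remove the temporary cache directory as soon as the check ends, whether it succeeded or not. This is only a sketch of that idea, not what the script above does. with_check_cache and check_repo are hypothetical names.

```shell
#!/bin/sh
# usage: with_check_cache COMMAND [ARGS...]
# Runs COMMAND with a fresh cache directory appended as its last argument,
# then removes that directory whether or not COMMAND succeeded.
with_check_cache() {
    cache_dir=$(mktemp -d "${TMPDIR:-/var/tmp}/restic-check-XXXXXXXX") || return 1
    "$@" "$cache_dir" && status=0 || status=$?
    rm -rf "$cache_dir"
    return "$status"
}

# Hypothetical use in the backup script:
#   check_repo() {
#       RESTIC_PASSWORD=$PASSWORD restic --quiet --cache-dir="$1" -r "$REMOTE_URL" check
#   }
#   with_check_cache check_repo || exit 1
```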

Finally, I printed a copy of each of my backup scripts, using enscript, to stash in a safe place:

enscript --highlight=bash --style=emacs --output=- backup-machine1-to-foobar | ps2pdf - > foobar.pdf

This is actually a pretty important step since without the password, you won't be able to decrypt and restore what's on the GnuBee.
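The script suggests pwgen -s 64 for generating that password. On a machine where pwgen isn't installed, something like the following sketch should give a comparable result using only od and /dev/urandom. random_password is a made-up name, and the output is hexadecimal rather than alphanumeric.

```shell
#!/bin/sh
# usage: random_password [BYTES]
# Prints BYTES random bytes (default 32) as lower-case hexadecimal,
# i.e. 64 characters by default.
random_password() {
    bytes=${1:-32}
    od -An -tx1 -N "$bytes" /dev/urandom | tr -d ' \n'
    echo
}

# Example:
#   PASSWORD="$(random_password)"
```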

If you use a Dynamic DNS setup to reach machines which are not behind a stable IP address, you will likely have a need to probe these machines' public IP addresses. One option is to use an insecure service like Oracle's http://checkip.dyndns.com/ which echoes back your client IP, but you can also do this on your own server if you have one.

There are multiple options to do this, like writing a CGI or PHP script, but those are fairly heavyweight if that's all you need mod_cgi or PHP for. Instead, I decided to use Apache's built-in Server-Side Includes.

Apache configuration

Start by turning on the include filter by adding the following in /etc/apache2/conf-available/ssi.conf:

AddType text/html .shtml

AddOutputFilter INCLUDES .shtml

and making that configuration file active:

a2enconf ssi

Then, find the vhost file where you want to enable SSI and add the following options to a Location or Directory section:

<Location /ssi_files>

Options +IncludesNOEXEC

SSLRequireSSL

Header set Content-Security-Policy: "default-src 'none'"

Header set X-Content-Type-Options: "nosniff"

Header set Cache-Control "max-age=0, no-cache, no-store, must-revalidate"

</Location>

before enabling the necessary modules:

a2enmod headers

a2enmod include

and restarting Apache:

apache2ctl configtest && systemctl restart apache2.service

Create an shtml page

With the web server ready to process SSI instructions, the following HTML blurb can be used to display the client IP address:

<!--#echo var="REMOTE_ADDR" -->

or any other built-in variable.

Note that you don't need to write a valid HTML document for the variable to be substituted and so the above one-liner is all I use on my server.
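On the client side, a Dynamic DNS script will probably want to check that what it got back really is an IP address before using it. Here is a minimal sketch for the IPv4 case. The is_ipv4 function and the example.com URL are placeholders, not part of the setup described here.

```shell
#!/bin/sh
# Succeeds if the argument looks like a dotted-quad IPv4 address.
is_ipv4() {
    # Reject anything that isn't four dot-separated groups of 1-3 digits.
    printf '%s\n' "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$' || return 1
    # Then make sure that each group is in the 0-255 range.
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    for octet in "$@"; do
        [ "$octet" -le 255 ] || return 1
    done
    return 0
}

# Hypothetical use (the host name is a placeholder):
#   ip=$(curl -fsS https://example.com/ssi_files/whatsmyip.shtml)
#   is_ipv4 "$ip" || exit 1
```

An IPv6 client address would need a separate check, which this sketch leaves out.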

Security concerns

The first thing to note is that the configuration section uses the IncludesNOEXEC option in order to disable arbitrary command execution via SSI. In addition, you can also make sure that the cgi module is disabled since that's a dependency of the more dangerous side of SSI:

a2dismod cgi

Of course, if you rely on this IP address to be accurate, for example because you'll be putting it in your DNS, then you should make sure that you only serve this page over HTTPS, which can be enforced via the SSLRequireSSL directive.

I included two other headers in the above vhost config (Content-Security-Policy and X-Content-Type-Options) in order to limit the damage that could be done in case a malicious file was accidentally dropped in that directory.

Finally, I suggest making sure that only the root user has write access to the directory which has server-side includes enabled:

$ ls -la /var/www/ssi_includes/

total 12

drwxr-xr-x 2 root root 4096 May 18 15:58 .

drwxr-xr-x 16 root root 4096 May 18 15:40 ..

-rw-r--r-- 1 root root 0 May 18 15:46 index.html

-rw-r--r-- 1 root root 32 May 18 15:58 whatsmyip.shtml
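To check this automatically, for example from a cronjob, something like the following could be used. It is only a sketch: find_unsafe_entries is a hypothetical helper which lists anything under a directory that is not owned by the expected user (root by default) or that is writable by group or others.

```shell
#!/bin/sh
# usage: find_unsafe_entries DIRECTORY [OWNER]
# Prints every entry under DIRECTORY which is not owned by OWNER
# (default: root) or which is group- or world-writable.
find_unsafe_entries() {
    dir=$1
    owner=${2:-root}
    find "$dir" \( ! -user "$owner" -o -perm -020 -o -perm -002 \) -print
}

# Example: no output means that only root can write there.
#   find_unsafe_entries /var/www/ssi_includes
```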

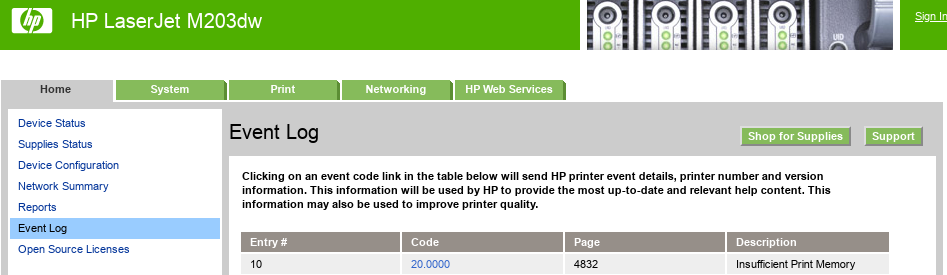

I recently found a few PDFs which I was unable to print due to those files causing insufficient printer memory errors:

I found a detailed explanation of what might be causing this which pointed the finger at transparent images, a PDF 1.4 feature which apparently requires a more recent version of PostScript than what my printer supports.

Using Okular's Force rasterization option (accessible via the print dialog) does work by essentially rendering everything ahead of time and outputting a big image to be sent to the printer. The quality is not very good however.

Converting a PDF to DjVu

The best solution I found makes use of a different file format: .djvu

Such files are not PDFs, but can still be opened in Evince and Okular, as well as in the dedicated DjVuLibre application.

As an example, I was unable to print page 11 of this paper. Using pdfinfo, I found that it is in PDF 1.5 format and so the transparency effects could be the cause of the out-of-memory printer error.

Here's how I converted it to a high-quality DjVu file I could print without problems using Evince:

pdf2djvu -d 1200 2002.04049.pdf > 2002.04049-1200dpi.djvu

Converting a PDF to PDF 1.3

I also tried the DjVu trick on a different unprintable PDF, but it failed to print, even after lowering the resolution to 600dpi:

pdf2djvu -d 600 dow-faq_v1.1.pdf > dow-faq_v1.1-600dpi.djvu

In this case, I used a different technique and simply converted the PDF to version 1.3 (from version 1.6 according to pdfinfo):

ps2pdf13 -r1200x1200 dow-faq_v1.1.pdf dow-faq_v1.1-1200dpi.pdf

This eliminates the problematic transparency and rasterizes the elements that version 1.3 doesn't support.
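In both cases, the PDF version reported by pdfinfo was the hint that transparency might be involved, since that feature arrived with PDF 1.4. That check could be scripted roughly as follows, assuming pdfinfo prints a "PDF version:" line as it does in poppler-utils. pdf_version and may_use_transparency are names invented for this sketch.

```shell
#!/bin/sh
# Extract the version number from pdfinfo output read on stdin.
pdf_version() {
    awk '/^PDF version:/ { print $3 }'
}

# Succeeds if the given version is 1.4 or later, i.e. recent enough for
# the file to contain transparency.
may_use_transparency() {
    awk -v v="$1" 'BEGIN { exit !(v + 0 >= 1.4) }'
}

# Example (requires pdfinfo):
#   version=$(pdfinfo dow-faq_v1.1.pdf | pdf_version)
#   may_use_transparency "$version" && echo "try pdf2djvu or ps2pdf13"
```

A version of 1.4 or later only means that transparency is possible, not that the file actually uses it.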

After upgrading to MythTV 30, I noticed that the interface of mythfrontend switched from the French language to English, despite having the following in my ~/.xsession for the mythtv user:

export LANG=fr_CA.UTF-8

exec ~/bin/start_mythtv

I noticed a few related error messages in /var/log/syslog:

mythbackend[6606]: I CoreContext mythcorecontext.cpp:272 (Init) Assumed character encoding: fr_CA.UTF-8

mythbackend[6606]: N CoreContext mythcorecontext.cpp:1780 (InitLocale) Setting QT default locale to FR_US

mythbackend[6606]: I CoreContext mythcorecontext.cpp:1813 (SaveLocaleDefaults) Current locale FR_US

mythbackend[6606]: E CoreContext mythlocale.cpp:110 (LoadDefaultsFromXML) No locale defaults file for FR_US, skipping

mythpreviewgen[9371]: N CoreContext mythcorecontext.cpp:1780 (InitLocale) Setting QT default locale to FR_US

mythpreviewgen[9371]: I CoreContext mythcorecontext.cpp:1813 (SaveLocaleDefaults) Current locale FR_US

mythpreviewgen[9371]: E CoreContext mythlocale.cpp:110 (LoadDefaultsFromXML) No locale defaults file for FR_US, skipping

Searching for that non-existent fr_US locale, I found that others have this in their logs and that it's apparently set by Qt as a combination of the language and country codes.

I therefore looked in the database and found the following:

MariaDB [mythconverg]> SELECT value, data FROM settings WHERE value = 'Language';

+----------+------+

| value | data |

+----------+------+

| Language | FR |

+----------+------+

1 row in set (0.000 sec)

MariaDB [mythconverg]> SELECT value, data FROM settings WHERE value = 'Country';

+---------+------+

| value | data |

+---------+------+

| Country | US |

+---------+------+

1 row in set (0.000 sec)

which explains the nonsensical FR_US locale.

I fixed the country setting like this:

MariaDB [mythconverg]> UPDATE settings SET data = 'CA' WHERE value = 'Country';

Query OK, 1 row affected (0.093 sec)

Rows matched: 1 Changed: 1 Warnings: 0

After logging out and logging back in, the user interface of the frontend is now using the fr_CA locale again and the database setting looks good:

MariaDB [mythconverg]> SELECT value, data FROM settings WHERE value = 'Country';

+---------+------+

| value | data |

+---------+------+

| Country | CA |

+---------+------+

1 row in set (0.000 sec)
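To double-check which values these two settings should hold for a given LANG, the locale name can be split with plain shell parameter expansion. This is only an illustrative sketch: the function names are made up, the upper-case language code is an assumption based on the database output above, and locale names without an underscore (such as C) are not handled.

```shell
#!/bin/sh
# Split a locale name such as fr_CA.UTF-8 into the two codes stored in
# the Language and Country settings.
language_from_locale() {
    # Everything before the first underscore, in upper case.
    printf '%s\n' "${1%%_*}" | tr '[:lower:]' '[:upper:]'
}

country_from_locale() {
    region=${1#*_}                      # e.g. CA.UTF-8
    printf '%s\n' "${region%%[.@]*}"    # drop the encoding or modifier
}

language_from_locale fr_CA.UTF-8   # prints FR
country_from_locale fr_CA.UTF-8    # prints CA
```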